Science of photography

The science of photography is the use of chemistry and physics in all aspects of photography. This applies to the camera, its lenses, physical operation of the camera, electronic camera internals, and the process of developing film in order to take and develop pictures properly.[1]

Optics

Camera obscura

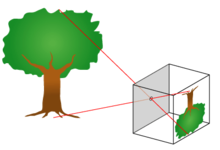

The fundamental technology of most photography, whether digital or analog, is the camera obscura effect and its ability to transform a three-dimensional scene into a two-dimensional image. At its most basic, a camera obscura consists of a darkened box with a very small hole in one side, which projects an image of the outside world onto the opposite side. This form is often referred to as a pinhole camera.

When aided by a lens, the hole in the camera does not have to be tiny to create a sharp and distinct image, and the exposure time can be decreased, which allows cameras to be handheld.

Lenses

A photographic lens is usually composed of several lens elements, which combine to reduce the effects of chromatic aberration, coma, spherical aberration, and other aberrations. A simple example is the three-element Cooke triplet, still in use over a century after it was first designed, but many current photographic lenses are much more complex.

Using a smaller aperture can reduce most, but not all, aberrations. They can also be reduced dramatically by using an aspheric element, but these are more complex to grind than spherical or cylindrical lenses. However, with modern manufacturing techniques the extra cost of manufacturing aspherical lenses is decreasing, and small aspherical lenses can now be made by molding, allowing their use in inexpensive consumer cameras. Fresnel lenses are not common in photography but are used in some cases due to their very low weight.[3] The recently developed fiber-coupled monocentric lens consists of spheres constructed of concentric hemispherical shells of different glasses tied to the focal plane by bundles of optical fibers.[4] Monocentric lenses are not yet used in cameras, because the technology only debuted in October 2013 at the Frontiers in Optics Conference in Orlando, Florida.

All lens design is a compromise between numerous factors, including cost. Zoom lenses (i.e. lenses of variable focal length) involve additional compromises and therefore normally do not match the performance of prime lenses.

When a camera lens is focused to project an object at some distance onto the film or detector, objects somewhat nearer or farther than that distance are also approximately in focus. The range of distances that are nearly in focus is called the depth of field. Depth of field generally increases with decreasing aperture diameter (increasing f-number). The unfocused blur outside the depth of field is sometimes used for artistic effect in photography. The subjective appearance of this blur is known as bokeh.

If the camera lens is focused at or beyond its hyperfocal distance, then the depth of field becomes large, covering everything from half the hyperfocal distance to infinity. This effect is used to make "focus free" or fixed-focus cameras.
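As a rough illustration, the hyperfocal distance can be estimated with the common thin-lens approximation H = f²/(N·c) + f, where f is the focal length, N the f-number and c the acceptable circle of confusion. The formula and the value c = 0.03 mm (a figure often quoted for the 35 mm format) are assumptions introduced here for the sketch, and the function names are hypothetical.

```python
def hyperfocal_distance_mm(focal_length_mm, f_number, coc_mm=0.03):
    """Approximate hyperfocal distance H = f^2 / (N * c) + f, in millimetres.

    coc_mm is the circle of confusion; 0.03 mm is an assumed value
    often quoted for the 35 mm format, not a figure from the article.
    """
    return focal_length_mm ** 2 / (f_number * coc_mm) + focal_length_mm


def near_limit_mm(focal_length_mm, f_number, coc_mm=0.03):
    """Nearest acceptably sharp distance when focused at the hyperfocal
    distance: half the hyperfocal distance, as stated in the text."""
    return hyperfocal_distance_mm(focal_length_mm, f_number, coc_mm) / 2


if __name__ == "__main__":
    h = hyperfocal_distance_mm(35.0, 8.0)
    print(f"hyperfocal distance: {h / 1000:.2f} m")
    print(f"sharp from about {near_limit_mm(35.0, 8.0) / 1000:.2f} m to infinity")
```

Under these assumptions a 35 mm lens at f/8 has a hyperfocal distance of roughly 5 m, which suggests why a fixed-focus camera with a short lens and a small aperture can render most scenes acceptably sharp.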

Aberration

Aberrations are the blurring and distorting properties of an optical system. A high-quality lens will produce fewer aberrations.

Spherical aberration occurs because light rays that strike a spherical lens or mirror near its edge are refracted or reflected more strongly than rays that strike nearer the center. Its magnitude depends on the focal length of the spherical lens and on the distance of the ray from its center. It is compensated by designing a multi-lens system or by using an aspheric lens.

Chromatic aberration is caused by a lens having a different refractive index for different wavelengths of light and the dependence of the optical properties on color. Blue light will generally bend more than red light. There are higher order chromatic aberrations, such as the dependence of magnification on color. Chromatic aberration is compensated by using a lens made out of materials carefully designed to cancel out chromatic aberrations.

Curved focal surface is the dependence of the first order focus on the position on the film or CCD. This can be compensated with a multiple lens optical design, but curving the film has also been used.

Focus

Focus is the tendency for light rays to reach the same place on the image sensor or film, independent of where they pass through the lens. For clear pictures, the focus is adjusted for distance, because at a different object distance the rays reach different parts of the lens with different angles. In modern photography, focusing is often accomplished automatically.

The autofocus system in modern SLRs uses a sensor in the mirror box to measure contrast. The sensor's signal is analyzed by an application-specific integrated circuit (ASIC), and the ASIC tries to maximize the contrast pattern by moving lens elements. The ASICs in modern cameras also have special algorithms for predicting motion, and other advanced features.

Diffraction limit

Since light propagates as waves, the patterns it produces on the film are subject to the wave phenomenon known as diffraction, which limits the image resolution to features on the order of several times the wavelength of light. Diffraction is the main effect limiting the sharpness of optical images from lenses that are stopped down to small apertures (high f-numbers), while aberrations are the limiting effect at large apertures (low f-numbers). Since diffraction cannot be eliminated, the best possible lens for a given operating condition (aperture setting) is one that produces an image whose quality is limited only by diffraction. Such a lens is said to be diffraction limited.

The diffraction-limited optical spot size on the CCD or film is proportional to the f-number (about equal to the f-number times the wavelength of light, which is near 0.0005 mm), making the overall detail in a photograph proportional to the size of the film or CCD divided by the f-number. For a 35 mm camera at f/11, this limit corresponds to about 6,500 resolution elements across the width of the film: 36 mm / (11 × 0.0005 mm) ≈ 6,500.
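The estimate above can be reproduced numerically. The following sketch uses only the approximation given in the text (spot size ≈ f-number × wavelength, with a wavelength of 0.0005 mm); the function names are hypothetical.

```python
WAVELENGTH_MM = 0.0005  # approximate wavelength of visible light, as quoted in the text


def diffraction_spot_mm(f_number, wavelength_mm=WAVELENGTH_MM):
    """Approximate diffraction-limited spot size: f-number times wavelength."""
    return f_number * wavelength_mm


def resolution_elements(sensor_width_mm, f_number, wavelength_mm=WAVELENGTH_MM):
    """Number of diffraction-limited spots that fit across the sensor width."""
    return sensor_width_mm / diffraction_spot_mm(f_number, wavelength_mm)


if __name__ == "__main__":
    # 35 mm film frame (36 mm wide) at several apertures
    for n in (4, 8, 11, 22):
        print(f"f/{n}: about {resolution_elements(36.0, n):,.0f} elements across the frame")
```

Because the spot size grows linearly with the f-number, stopping down from f/11 to f/22 halves the number of resolvable elements across the frame in this simple model.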

The finite spot size caused by diffraction can also be expressed as a criterion for distinguishing distant objects: two distant point sources can only produce separate images on the film or sensor if their angular separation exceeds the wavelength of light divided by the width of the open aperture of the camera lens.
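A short sketch of this criterion follows, taking the open aperture width as focal length divided by f-number. The example lens (50 mm at f/8), the 100 m subject distance and the function names are illustrative assumptions, and the simple ratio wavelength/aperture omits numerical factors of order one that more detailed criteria include.

```python
def aperture_diameter_mm(focal_length_mm, f_number):
    """Width of the open aperture: focal length divided by f-number."""
    return focal_length_mm / f_number


def min_angular_separation_rad(wavelength_mm, aperture_mm):
    """Smallest resolvable angle (radians), using the wavelength / aperture
    criterion stated in the text."""
    return wavelength_mm / aperture_mm


if __name__ == "__main__":
    d = aperture_diameter_mm(50.0, 8.0)            # 6.25 mm
    theta = min_angular_separation_rad(0.0005, d)  # radians
    # Small-angle approximation: separation = angle * distance
    print(f"two points 100 m away must be about {theta * 100_000:.1f} mm apart")
```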

Chemical processes

Gelatin silver

The gelatin silver process is the most commonly used chemical process in black-and-white photography, and is the fundamental chemical process for modern analog color photography. As such, films and printing papers available for analog photography rarely rely on any other chemical process to record an image.

Daguerreotypes

Daguerreotype (/dəˈɡɛər(i.)əˌtaɪp, -(i.)oʊ-/;[5][6] French: daguerréotype) was the first publicly available photographic process; it was widely used during the 1840s and 1850s. "Daguerreotype" also refers to an image created through this process.

Collodion process and the ambrotype

The collodion process is an early photographic process. Mostly synonymous with the "collodion wet plate process", it requires the photographic material to be coated, sensitized, exposed and developed within the span of about fifteen minutes, necessitating a portable darkroom for use in the field. Collodion is normally used in its wet form, but can also be used in dry form, at the cost of greatly increased exposure time. The longer exposure made the dry form unsuitable for the usual portraiture work of most professional photographers of the 19th century. The use of the dry form was therefore mostly confined to landscape photography and other special applications where minutes-long exposure times were tolerable.

Cyanotypes

Cyanotype is a photographic printing process that produces a cyan-blue print. Engineers used the process well into the 20th century as a simple and low-cost process to produce copies of drawings, referred to as blueprints. The process uses two chemicals: ferric ammonium citrate and potassium ferricyanide.

Platinum and palladium processes

Platinum prints, also called platinotypes, are photographic prints made by a monochrome printing process involving platinum.

Gum bichromate

Gum bichromate is a 19th-century photographic printing process based on the light sensitivity of dichromates. It is capable of rendering painterly images from photographic negatives. Gum printing is traditionally a multi-layered printing process, but satisfactory results may be obtained from a single pass. Any color can be used for gum printing, so natural-color photographs are also possible by using this technique in layers.

C-prints and color film

A chromogenic print, also known as a C-print or C-type print,[7] a silver halide print,[8] or a dye coupler print,[9] is a photographic print made from a color negative, transparency or digital image, and developed using a chromogenic process.[10] They are composed of three layers of gelatin, each containing an emulsion of silver halide, which is used as a light-sensitive material, and a different dye coupler of subtractive color which together, when developed, form a full-color image.[9][10][11]

Digital sensors

An image sensor or imager is a sensor that detects and conveys information used to make an image. It does so by converting the variable attenuation of light waves (as they pass through or reflect off objects) into signals, small bursts of current that convey the information. The waves can be light or other electromagnetic radiation. Image sensors are used in electronic imaging devices of both analog and digital types, which include digital cameras, camera modules, camera phones, optical mouse devices,[1][2][3] medical imaging equipment, night vision equipment such as thermal imaging devices, radar, sonar, and others. As technology changes, electronic and digital imaging tends to replace chemical and analog imaging.

Practical applications

Law of reciprocity

Exposure ∝ Aperture Area × Exposure Time × Scene Luminance

The law of reciprocity describes how light intensity and duration trade off to make an exposure—it defines the relationship between shutter speed and aperture, for a given total exposure. Changes to any of these elements are often measured in units known as "stops"; a stop is equal to a factor of two.

Halving the amount of light exposing the film can be achieved by any one of the following:

- Closing the aperture by one stop

- Decreasing the shutter time (increasing the shutter speed) by one stop

- Cutting the scene lighting by half

Likewise, doubling the amount of light exposing the film can be achieved by the opposite of one of these operations.

The luminance of the scene, as measured on a reflected light meter, also affects the exposure proportionately. The amount of light required for proper exposure depends on the film speed, which can be varied in stops or fractions of stops. With either of these changes, the aperture or shutter speed can be adjusted by an equal number of stops to get to a suitable exposure.

Light is most easily controlled through the use of the camera's aperture (measured in f-stops), but it can also be regulated by adjusting the shutter speed. Using faster or slower film is not usually something that can be done quickly, at least using roll film. Large format cameras use individual sheets of film, and each sheet could be a different speed. A large format camera fitted with a Polaroid back can also switch between backs containing Polaroid film of different speeds. Digital cameras can easily adjust the film speed they are simulating by adjusting the exposure index, and many digital cameras can do so automatically in response to exposure measurements.

For example, starting with an exposure of 1/60 at f/16, the depth-of-field could be made shallower by opening up the aperture to f/4, an increase in exposure of 4 stops. To compensate, the shutter speed would need to be increased as well by 4 stops, that is, adjust exposure time down to 1/1000. Closing down the aperture limits the resolution due to the diffraction limit.
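The arithmetic in this example can be checked with a short sketch. It relies on two facts stated above: a stop is a factor of two in light, and exposure is proportional to aperture area, so the light admitted scales with the inverse square of the f-number. The function names are hypothetical.

```python
import math


def aperture_stops_gained(f_from, f_to):
    """Stops of extra light when changing aperture from f_from to f_to.

    Aperture area scales as 1 / f_number^2, so each stop corresponds
    to a factor of sqrt(2) in f-number.
    """
    return 2 * math.log2(f_from / f_to)


def compensated_shutter_time(time_s, stops_gained):
    """Shutter time that keeps total exposure constant after gaining
    `stops_gained` stops of light elsewhere."""
    return time_s / 2 ** stops_gained


if __name__ == "__main__":
    stops = aperture_stops_gained(16, 4)
    t = compensated_shutter_time(1 / 60, stops)
    print(f"{stops:.0f} stops gained; shutter time becomes 1/{1 / t:.0f} s")
```

The exact result is 1/960 s; the nearest marked shutter speed on most cameras is 1/1000 s, which is the value quoted in the example.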

The reciprocity law specifies the total exposure, but the response of a photographic material to a constant total exposure may not remain constant for very long exposures in very faint light, such as photographing a starry sky, or very short exposures in very bright light, such as photographing the sun. This is known as reciprocity failure of the material (film, paper, or sensor).

Motion blur

Motion blur is caused when either the camera or the subject moves during the exposure. This causes a distinctive streaky appearance to the moving object or the entire picture (in the case of camera shake).

Motion blur can be used artistically to create the feeling of speed or motion, as with running water. An example of this is the technique of "panning", where the camera is moved so it follows the subject, which is usually fast moving, such as a car. Done correctly, this will give an image of a clear subject, but the background will have motion blur, giving the feeling of movement. This is one of the more difficult photographic techniques to master, as the movement must be smooth, and at the correct speed. A subject that gets closer or further away from the camera may further cause focusing difficulties.

Light trails are another photographic effect where motion blur is used. Photographs of the lines of light visible in long exposure photos of roads at night are one example of the effect.[12] This is caused by the cars moving along the road during the exposure. The same principle is used to create star trail photographs.

Generally, motion blur is something that is to be avoided, and this can be done in several different ways. The simplest way is to limit the shutter time so that there is very little movement of the image during the time the shutter is open. At longer focal lengths, the same movement of the camera body will cause more motion of the image, so a shorter shutter time is needed. A commonly cited rule of thumb is that the shutter speed in seconds should be about the reciprocal of the 35 mm equivalent focal length of the lens in millimeters. For example, a 50 mm lens should be used at a minimum speed of 1/50 sec, and a 300 mm lens at 1/300 of a second. This can cause difficulties when used in low light scenarios, since exposure also decreases with shutter time.
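The rule of thumb can be written as a one-line calculation. In this sketch the `crop_factor` parameter, used to convert an actual focal length to its 35 mm equivalent, is an assumption added for illustration, as is the function name.

```python
def slowest_handheld_shutter_s(focal_length_mm, crop_factor=1.0):
    """Longest recommended handheld exposure, in seconds, according to the
    reciprocal rule: 1 / (35 mm equivalent focal length in millimetres).

    crop_factor (assumed parameter) converts the actual focal length to
    its 35 mm equivalent; 1.0 means a 35 mm (full-frame) format.
    """
    return 1.0 / (focal_length_mm * crop_factor)


if __name__ == "__main__":
    for focal in (50, 300):
        t = slowest_handheld_shutter_s(focal)
        print(f"{focal} mm lens: no slower than 1/{1 / t:.0f} s")
```

This is a guideline rather than a physical law; steadier hands or image stabilization may allow longer exposures, and very high resolution sensors may call for shorter ones.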

Motion blur due to subject movement can usually be prevented by using a faster shutter speed. The exact shutter speed will depend on the speed at which the subject is moving. For example, a very fast shutter speed will be needed to "freeze" the rotors of a helicopter, whereas a slower shutter speed will be sufficient to freeze a runner.

A tripod may be used to avoid motion blur due to camera shake. This will stabilize the camera during the exposure. A tripod is recommended for exposure times longer than about 1/15 second. There are additional techniques which, in conjunction with use of a tripod, ensure that the camera remains very still. These may employ a remote actuator, such as a cable release or infrared remote switch, to activate the shutter, so as to avoid the movement normally caused when the shutter release button is pressed directly. The use of a "self timer" (a timed release mechanism that automatically trips the shutter release after an interval of time) can serve the same purpose. Most modern single-lens reflex cameras (SLRs) have a mirror lock-up feature that eliminates the small amount of shake produced by the mirror flipping up.

Film grain resolution

Black-and-white film has a "shiny" side and a "dull" side. The dull side is the emulsion, a gelatin that suspends an array of silver halide crystals. These crystals contain silver grains that determine how sensitive the film is to light exposure, and how fine or grainy the negative and the print will look. Larger grains mean faster exposure but a grainier appearance; smaller grains are finer looking but take more exposure to activate. The graininess of film is indicated by its ISO rating, generally a multiple of 10 or 100. Lower numbers indicate finer grain but slower film, and vice versa.

Contribution to noise (grain)

Quantum efficiency

Light comes in particles, and the energy of each particle (the photon) is the frequency of the light times the Planck constant. A fundamental property of any photographic method is how it collects the light on its photographic plate or electronic detector.
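As a worked illustration of the relation E = hν, the following sketch computes the energy of a single photon from its wavelength, using ν = c/λ. The 500 nm example wavelength (green light, matching the 0.0005 mm figure used elsewhere in the article) and the function names are choices made for this sketch.

```python
PLANCK_J_S = 6.62607015e-34        # Planck constant, joule-seconds
SPEED_OF_LIGHT_M_S = 299_792_458   # speed of light in vacuum, metres per second
JOULES_PER_EV = 1.602176634e-19    # one electronvolt in joules


def photon_energy_joules(wavelength_m):
    """Photon energy E = h * nu, with frequency nu = c / wavelength."""
    frequency_hz = SPEED_OF_LIGHT_M_S / wavelength_m
    return PLANCK_J_S * frequency_hz


def photon_energy_ev(wavelength_m):
    """Same energy expressed in electronvolts."""
    return photon_energy_joules(wavelength_m) / JOULES_PER_EV


if __name__ == "__main__":
    wavelength = 500e-9  # 500 nm
    print(f"{photon_energy_joules(wavelength):.3e} J")
    print(f"{photon_energy_ev(wavelength):.2f} eV")
```

A visible photon therefore carries only a few electronvolts, which is why a detector's sensitivity depends on whether that energy is enough to trigger a response in the light-sensitive material.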

CCDs and other photodiodes

Photodiodes are back-biased semiconductor diodes, in which an intrinsic layer with very few charge carriers prevents electric currents from flowing. Depending on the material, photons have enough energy to raise one electron from the upper full band to the lowest empty band. The electron and the "hole", or the empty space where it was, are then free to move in the electric field and carry current, which can be measured. The fraction of incident photons that produce carrier pairs depends largely on the semiconductor material.

Photomultiplier tubes

Photomultiplier tubes are vacuum phototubes that amplify light by accelerating the photoelectrons to knock more electrons free from a series of electrodes. They are among the most sensitive light detectors but are not well suited to photography.

Aliasing

Aliasing can occur in optical and chemical processing, but it is more common and easily understood in digital processing. It occurs whenever an optical or digital image is sampled or re-sampled at a rate which is too low for its resolution. Some digital cameras and scanners have anti-aliasing filters to reduce aliasing by intentionally blurring the image to match the sampling rate. It is common for film developing equipment used to make prints of different sizes to increase the graininess of the smaller size prints by aliasing.

It is usually desirable to suppress both noise, such as grain, and details of the real object that are too small to be represented at the sampling rate.
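A one-dimensional sketch can illustrate the effect. A fine pattern with 9 cycles per unit length, sampled only 10 times per unit length, yields exactly the same sample values (up to sign) as a coarse pattern with 1 cycle per unit length, so the fine detail reappears as a false coarse pattern. The specific numbers and function names are illustrative assumptions.

```python
import math


def sample_sine(cycles_per_unit, samples_per_unit, n_samples):
    """Sample a sine pattern of the given spatial frequency at a fixed rate."""
    return [
        math.sin(2 * math.pi * cycles_per_unit * n / samples_per_unit)
        for n in range(n_samples)
    ]


def alias_frequency(cycles_per_unit, samples_per_unit):
    """Apparent frequency after sampling, folded into the range
    [0, samples_per_unit / 2] (the Nyquist limit)."""
    folded = cycles_per_unit % samples_per_unit
    return min(folded, samples_per_unit - folded)


if __name__ == "__main__":
    fine = sample_sine(9, 10, 20)    # too fine for 10 samples per unit
    coarse = sample_sine(1, 10, 20)  # well within the sampling limit
    print("apparent frequency:", alias_frequency(9, 10))
    # The fine pattern's samples are the coarse pattern's samples, sign-flipped.
    print(all(abs(a + b) < 1e-9 for a, b in zip(fine, coarse)))
```

An anti-aliasing filter works by blurring away patterns finer than half the sampling rate before sampling, so that they cannot fold back into the image as false coarse detail.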

See also

- Astrophotography

- Underwater photography

- Infrared photography

- Ultraviolet photography

- Silver bromide

- Photographic processing

- Image editing

- Highlight headroom

References

- "Science of Photography". Photography.com. Archived from the original on Feb 13, 2008. Retrieved 2007-05-21.

- Kirkpatrick, Larry D.; Francis, Gregory E. (2007). "Light". Physics: A World View (6 ed.). Belmont, California: Thomson Brooks/Cole. p. 339. ISBN 978-0-495-01088-3.

- "Phase Fresnel: From Wildlife Photography to Portraiture | Nikon".

- "Logitech Software".

- Jones, Daniel (2003) [1917]. Peter Roach; James Hartmann; Jane Setter (eds.). English Pronouncing Dictionary. Cambridge: Cambridge University Press. ISBN 3-12-539683-2.

- "daguerreotype". Merriam-Webster.com Dictionary. "daguerreotype". Dictionary.com Unabridged (Online). n.d. "daguerreotype". The American Heritage Dictionary of the English Language (5th ed.). HarperCollins.

- Tate. "C-print – Art Term". Tate. Retrieved 2020-08-16.

- Weaver, Gawain; Long, Zach (2009). "Chromogenic Characterization: A Study of Kodak Prints 1942-2008" (PDF). Topics in Photographic Preservation. 13. American Institute for Conservation of Historic and Artistic Works: 67–78.

- "Definitions of Print Processes - Chromogenic Print". Santa Fe, New Mexico: Photo-Eye Gallery. Retrieved October 28, 2017.

- "Chromogenic prints". L’Atelier de Restauration et de Conservation des Photographies de la Ville de Paris. 2013. Retrieved November 12, 2017 – via Paris Photo.

- Fenstermaker, Will (April 27, 2017). "From C-Print to Silver Gelatin: The Ultimate Guide to Photo Prints". Artspace. Retrieved November 13, 2017.

- "TrekLens – JoBurg Skyline and Light Trails Photo". treklens.com. Retrieved 4 April 2010.